How to think about machines that think

The important question is not "What is artificial intelligence capable of?" The important question is "How much will it cost?"

Last week OpenAI announced Sora, a new text-to-video engine, and kicked off another round of frenzied discourse about the meaning and future of artificial intelligence. I’ve written about AI twice before, once about how AI will change the world and then again about how AI will not destroy the world. Here I’d like to explore in a little more detail how I think AI will change the world.

Most of the public discourse around AI implicitly frames artificial intelligence as a kind of exotic alien species and then focuses on comparing that hypothetical species to humans to describe its capabilities or speculate about its motives. To be honest, I don’t think that’s a particularly helpful way to reason about AI. For one thing, what does "human performance" even mean? Does the human capacity for chess refer to Magnus Carlsen or to a "typical" chess player?[1]

For another, what kind of artificial intelligence are we comparing ourselves to? Has it been trained on one specific task, or many different tasks? GPT-4 scores in the ~90th percentile on the LSAT but doesn’t understand the rules of chess and tends to cheat. Stockfish can beat grandmasters at chess but chess is the only thing it can do. Should we measure AI performance by comparing Stockfish to Magnus Carlsen? GPT-4 to a median high school student?

But the most important problem with imagining artificial intelligence as a separate species competing with humanity is that (at least so far) AI doesn’t do anything without human input. The output of an LLM (or any AI system) is actually the combination of the artificial intelligence of the tool and the human intelligence of the person wielding it. There is no way to define 'artificial intelligence' in isolation from humans because none of the artificial intelligence we know how to build does anything when isolated from humans. AI as we know it today is purely reactive.

Consider for example trying to measure how "good" an AI image generator is at creating a picture of a butterfly. How creative is the human operator allowed to be with their prompt? How many images can they create? How much curatorial judgement should they exercise in selecting the final output? The only way to make a general statement about "AI art" is to ignore the fact that a human was the final authority over every piece of AI art ever made. The person choosing which output to consider and in what context is what makes the image art!

Trying to distinguish the skill of the person prompting and selecting the images from the "skill" of the image generator itself is like trying to distinguish the skill of a NASCAR driver from the "skill" of her vehicle. The tools really do transform what is possible! But it isn’t useful to think of cars as "competing" with human sprinters and it isn’t useful to think of race car drivers as "sprinters who cheat." Both AI image generators and race cars enable humans to go faster than they could alone, but neither goes anywhere unless someone is steering.[2]

Rather than thinking of artificial intelligence as a potentially hostile alien species I think it is much more accurate and useful to think of artificial intelligence as a suite of power tools for the human mind. It was once the case that construction was the natural domain of the people who were physically strongest — but the cheaper and more widespread power tools became, the less physical strength mattered. We should expect AI to commoditize intelligence in a similar way.

The interesting question is not whether artificial intelligence can surpass human capacity in any particular task. It absolutely, definitely can. At the time of writing artificial intelligence has beaten the outer limits of human skill in backgammon (1979), checkers (1994), chess (1997), Jeopardy! (2011), Go (2015), poker (2015) and more recently Stratego (2022). Artificial intelligence is better than humans at protein folding, diagnosing breast cancer from x-rays, and managing the power grid. For any task where performance can be measured, AI will eventually outperform humans.

More precisely, AI-assisted humans will outperform humans who don’t have (or choose not to use) AI tools, in the same way that tool-assisted humans outperform humans who don’t have (or choose not to use) mechanical tools. John Henry will lose to the man with the drilling machine. Hiring someone who doesn’t use AI will be like hiring a woodworker who doesn’t use power tools — not necessarily pointless but more of an aesthetic choice than a practical one.

On the other hand, the existence of power tools has not obsoleted human labor. Humans are remarkably adaptable and efficient, so we are good at filling in the awkward gaps between optimized tooling. We should expect similar patterns to emerge from intellectual power tools: frequent, well-defined tasks will be delegated to carefully optimized tools and unusual/ambiguous tasks will require the attention of the skilled craftspeople who wield the tools.

The most interesting question of our era is not "Will artificial intelligence surpass human intelligence?" in the same way that the most interesting question of the industrial revolution was not "Will mechanical strength surpass human strength?" Of course it will! The interesting questions are how much this new solution will cost and how that cost will reshape the economy.

"In the past, jobs were about muscles.

Now they are about brains.

In the future they will be about heart."

- Minouche Shafik

We have a tendency to assume that AI will replace humans at any task that it is capable of accomplishing, but that’s obviously not true in the limit. We have the technology to build mechanical tools that can accomplish any physical task more precisely and reliably than a human ever could, but there are still lots of physical tasks that we use humans to accomplish. Building a machine for every physical task would be needlessly expensive and complicated, and the same will be true of the digital machines we build to handle intellectual tasks. The right question is not "What tasks will AI be better at than humans?" The right question is "What jobs will AI be able to perform more cheaply than humans?"

Right now the cost of AI services is heavily subsidized by venture capital competing to capture media attention and market share. The technology is still being developed, which means the true cost of building and operating artificial intelligence tools is not well understood yet, even by the experts. We also don’t yet know the most valuable ways to use artificial intelligence, so we don’t know what kind of prices AI providers will be able to charge or what business models will make sense.

For example, I think it’s quite possible that artificial intelligence turns out to be incredibly important without ever being particularly profitable. Maybe freely available open-source alternatives will be "good enough" and the AI market will turn out to be like Craigslist or Wikipedia where profit-agnostic competitors drive the market price of a valuable service down to free. Maybe AI will be so incredibly productive it will drive down the price of everything! Personally, I would be in favor of that.

On the other hand, maybe AI will end up being critical for military planning and governments of the world will nationalize the world’s computing supply, leaving only a trickle of resources for the civilian economy. Maybe we are living through a brief, beautiful moment when it is possible for normal people to use AI frivolously, before it is monopolized by the powerful. Personally, I would be less in favor of that.

We don’t know yet what AI is useful for or how much it will cost — but there are still some reasonable predictions we can start to make. It seems pretty likely that a general purpose AI chatbot / image generator will be available to ~everyone for ~free. That’s probably not the most powerful form that AI technology will take, but it is already powerful enough to replace the majority of human labor in translation, transcription, summarization, stock photography, basic graphic design and copywriting.

On a slightly longer timeframe I expect accountants, paralegals, research assistants, data analysts and consultants will also be replaced by AI. Doctors, lawyers, engineers and programmers will probably still exist but their jobs will be very different. In many cases their actual responsibility will move from producing answers to reviewing the answers produced by AI and signing off on them. The jobs of the future may be less about performance and more about accountability.

People tend to assume that if artificial intelligence eliminates a particular job (or makes it radically more efficient) there will be fewer jobs to go around, but I don’t think it’s that obvious. Perhaps if doctors are simply reviewing diagnoses instead of making them we will need fewer doctors. Or perhaps cheaper medical care will allow so many more patients to seek treatment that we will need more doctors. Perhaps we will need fewer doctors, but many more people working on training new medical AI models.

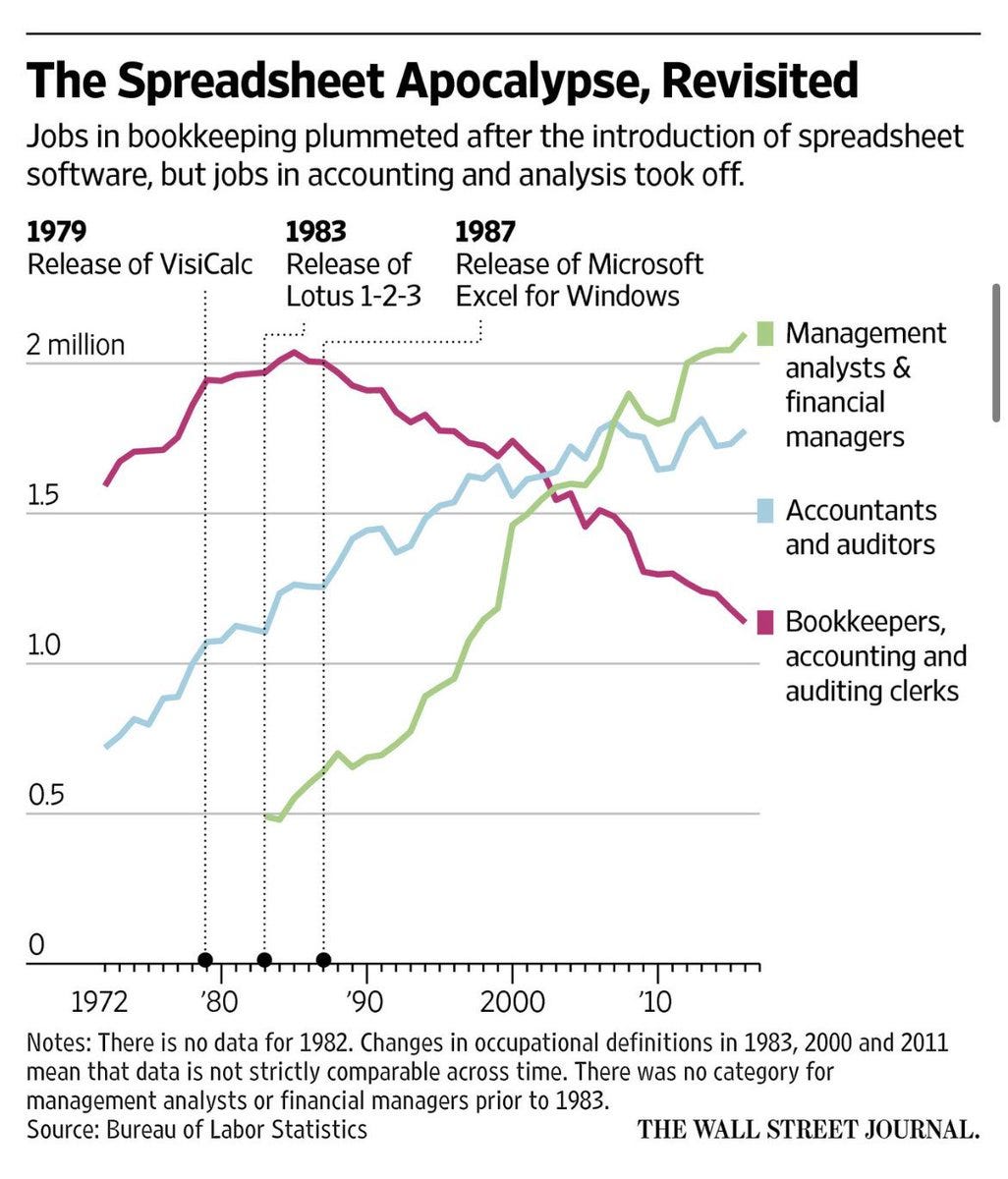

People once worried that computer spreadsheets would destroy the bookkeeping industry, and they were right! Bookkeeping jobs started to vanish shortly after the release of Lotus 1-2-3 and Excel. But the worry was misplaced: once bookkeeping was cheap, financial data became plentiful, and the lost bookkeeping jobs were replaced by a surge in demand for financial analysts. Data entry jobs disappeared, but data management jobs emerged to replace them. Human attention moved away from low-level details and towards higher-level plans. Spreadsheets were bad news for bookkeepers specifically, but great news for the economy overall.

This kind of economic disruption is obviously still chaotic for the people who live through it — the notion that someone, somewhere got a better job as a financial analyst is cold comfort to someone who just lost their job as a bookkeeper. It’s understandable that people resent a technology that threatens their way of life. The emergence of artificial intelligence will likely be even more turbulent than past technological revolutions because it is unfolding faster and affects almost everyone.

Artificial intelligence is also uniquely disruptive because it poses the greatest threat to (relatively) wealthy and high-status knowledge workers — highly enfranchised members of society who have the means and motivation to fight back. If AI radically increases productivity that will be good news for investors (because it will lower business costs) and for the poor (because it will lower prices) but possibly very bad news for the upper middle class, because their salary is the cost being eliminated.

I think one of the reasons AI doomerism gets so much attention (even though it is mostly silly) is that it is easier to speculate about sci-fi horror stories than to confront the very real challenges that artificial intelligence will actually create. The meaning of art and storytelling will forever change. Our systems of law and governance will need to be rebuilt to handle our newfound power. We will need new tools to identify and agree on the truth. And while we are collectively stumbling through these lessons, lots and lots of people will lose their jobs.

Even if the new world we are building is a good one (and FWIW I think it will be) many lives will still be lost (or ruined) in the transition. We invented the car (1885) almost 75 years before we got around to inventing the modern seat belt (1959). We won’t start learning how to solve the problems of artificial intelligence until it has already started causing the problems we will need to solve. The time between when artificial intelligence first arrives and when it has been fully integrated into society will be painful. We should start planning for it now.

I am working on two more posts in this series for paid subscribers. The first will focus on how artificial intelligence will impact human creativity (Don’t be afraid of infinite beauty) and the second will focus on how artificial intelligence will impact the pursuit of truth (The steep cost of cheap lies). If you are interested in either of those topics, consider subscribing. If you are not interested in either of those topics, consider subscribing ironically, to confound the algorithm.

Either way, expect crypto-focused coverage to continue in the meantime.

[1] Is the human player allowed to use a buttplug?

[2] Yes, I am aware that artificial intelligence can drive cars. Even for self-driving cars a human is choosing the destination. The steering wheel is just a keyboard now.