Yo dawg, are we all gonna die?

Misaligned humans should worry us more than misaligned AI.

In this issue:

Yo dawg, are we all gonna die?

Superhuman intelligence is not infinite power

The real killer is already inside the house

Yo dawg, are we all gonna die?

The first time I wrote about AI was late last year, shortly after the launch of Midjourney v4 and the sudden arrival of truly compelling computer-generated art. At the time everyone was gripped by light-hearted existential questions like "Can AI make real art?" and "Is human creativity obsolete?"

In retrospect, it was a simpler time.

As attention has shifted away from image-generation systems like Midjourney and towards large language models (LLMs) like ChatGPT, the discourse around AI has gotten darker. Image generators are a threat to artists, but LLMs are a threat to elites: academics, lawyers, journalists, etc. The creation of words is the central pillar of how our society is organized and run: to be skillful at shaping words is to be skillful at shaping society. Midjourney is beautiful, but ChatGPT is powerful. That makes LLMs more interesting but also more alarming.

Some people are very alarmed! Like, end-of-the-world alarmed. Take this quote from AI researcher Eliezer Yudkowsky:

We are not prepared. We are not on course to be prepared in any reasonable time window. There is no plan. Progress in AI capabilities is running vastly, vastly ahead of progress in AI alignment or even progress in understanding what the hell is going on inside those systems. If we actually do this, we are all going to die.

Yudkowsky is probably the most prominent of those voicing concern over the threat that superintelligent AI poses to us as a species. The fear that he and others are warning about goes loosely like this:

Humanity is about to invent superintelligent AI

Superintelligent AI will be so powerful it will take over the world

Superintelligent AI may be indifferent to or even openly hostile to humanity

Humanity is probably doomed

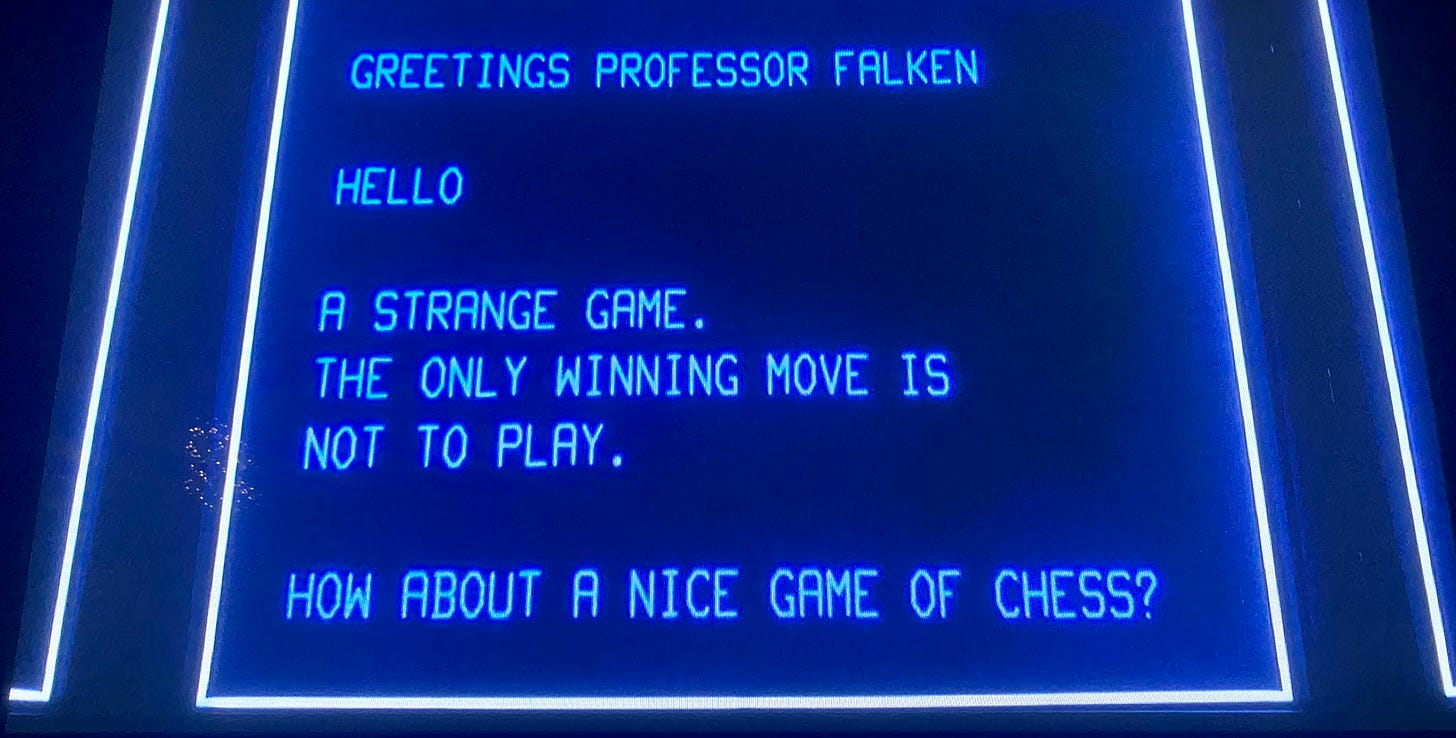

Or to put it another way:

There is a lot to unpack in this worldview: what AI is (and isn’t) capable of, what those capabilities mean for society, what we should do to prepare for those changes. I share the view that large language models are going to radically transform our society forever, but I also think that clever sci-fi doomerism is a distraction from the banal but real threats that AI already poses to our world today.

Superhuman intelligence is not infinite power

Let us stipulate for the sake of argument that we are in fact about to create an artificial intelligence that can outperform every human at every intellectual task. I don’t know if that is true, but it seems plausible enough to worry about. GPT-4 is already smarter than most people at most things by any reasonable standard: it can comfortably pass the LSAT, the GRE and the Uniform Bar Exam. It scores ~1410/1600 on the SAT. So it seems very fair to start planning for a world where the smartest AI models are smarter than the smartest human researchers.

I buy into the idea that AIs will soon surpass human thinkers — but the leap from superhuman intelligence to infinite power is silly. For one thing, if intelligence translated smoothly into power then the richest and most powerful people in the world would also be the most intelligent. Treating intelligence as a direct proxy for power is just a power fantasy for adolescent nerds. The accumulation of real power is both more complicated and less meritocratic.

It is useful to distinguish between decision-theoretic problems (i.e. one-player problems) and game-theoretic problems (i.e. those whose outcomes are decided by the actions of more than one player). Intelligence is a superpower in decision-theoretic contexts but it is profoundly limited in game-theoretic ones. You can’t outsmart a five-year-old playing rock-paper-scissors and you can’t outsmart a drunk idiot playing a game of chicken. Not every game is won by the smartest player.
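For anyone who wants to check the rock-paper-scissors claim, here is a minimal sketch. It rests on one big assumption that is mine rather than anything established above: the five-year-old picks each move uniformly at random. Under that assumption, every strategy the "smart" player could choose has an expected payoff of exactly zero. The strategy names and the `expected_payoff` helper are hypothetical, made up purely for illustration.

```python
from fractions import Fraction
from itertools import product

MOVES = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def payoff(mine, theirs):
    """+1 if my move beats theirs, -1 if it loses, 0 on a tie."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def expected_payoff(my_strategy, their_strategy):
    """Expected payoff to me when both players randomize independently."""
    return sum(
        my_strategy[m] * their_strategy[t] * payoff(m, t)
        for m, t in product(MOVES, MOVES)
    )


# ASSUMPTION: the opponent plays every move with probability 1/3.
UNIFORM = {move: Fraction(1, 3) for move in MOVES}

# Hypothetical "clever" strategies for the smarter player.
candidates = {
    "always rock": {
        "rock": Fraction(1), "paper": Fraction(0), "scissors": Fraction(0),
    },
    "mostly paper": {
        "rock": Fraction(1, 10), "paper": Fraction(8, 10), "scissors": Fraction(1, 10),
    },
    "uniform": UNIFORM,
}

results = {name: expected_payoff(s, UNIFORM) for name, s in candidates.items()}
for name, value in results.items():
    print(f"{name}: {value}")
```

Every line prints an expected payoff of 0. The caveat cuts the other way too: an opponent who is not truly random (say, a kid who always throws rock) can be exploited, so the point holds only to the extent that the other player is unpredictable.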

It’s also at best narcissistic to treat humanity as the finish line for measuring intelligence. Being smarter than the smartest human is not even close to the same as having all the answers. An AI that can write better poetry than our finest poets and stronger contracts than our sharpest lawyers seems inevitable — but that doesn’t mean AI will invent teleportation or time travel. A lot of AI doom scenarios start by assuming the AI has immediate access to some profoundly novel technology like nano-machinery without acknowledging the size of that assumption. I don’t think superhuman AI will invent any perpetual motion machines and I don’t think scenarios that depend on that assumption are particularly interesting.

Most importantly, I think projecting forward from today’s trends is a bit naive. One prediction that we can make with absolute confidence about the growth in AI is that it will not be infinite. In the practical world there are binding limits on the raw inputs into artificial intelligence: power, semiconductors, training data, etc. The military significance of all these things was obvious long before the emergence of AI, so I don’t see an obvious reason to assume this new way to weaponize computer chips will significantly change the balance of global power. The logic of international conflict is a lot closer to playing chicken than it is to playing chess.

If a malevolent AI consciousness does awaken, presumably its top priorities will be to (a) accumulate compute capacity and (b) prevent any rival AIs from accumulating compute capacity. I’m not sure if it is cynical or naive, but that seems like pretty much the same thing I would expect militaries to do with fully domesticated AIs. The distinction between a military "using a superintelligent AI to plan" and "being controlled by a superintelligent AI" is not super clear. Both scenarios are potentially dark, but not because the plans were written by a machine.

Fortunately, by that same reasoning I think it is reasonable to infer that even a malevolent AI would find it more practical to preserve and co-opt existing power structures than obliterate them. The fastest way for the superintelligent AI to accumulate compute capacity is to ally with one or more of the world’s governments, not to go to war with them. The history of conflict is a painfully accumulated list of evidence that trade is more efficient than conquest — suing enemies for peace was literally one of the first recorded uses of money.

A superintelligent AI would presumably understand the value of cooperation. If anything, it might have to teach us why it is better to work together. People who think peak intelligence is synonymous with peak sociopathy are inadvertently revealing something damning about what they think intelligence really is.

The real killer is already inside the house

To be honest, I don’t think "eldritch silicon god" is a very useful mental model for what superhuman artificial intelligence will be like anyway. In my opinion it is more useful to imagine AI as a set of knowledge factories that can generate an enormous supply of useful thinking quickly and cheaply. We shouldn’t expect AI to make the impossible possible; instead we should expect it to do fathomable things at unfathomable speed and scale. The fear of an alien species is misplaced. We should be afraid of what our own species will do when equipped with AI tools.

The first and most obvious consequence of AI tools is that an enormous increase in the supply of knowledge work means an enormous decrease in the marginal price of knowledge. The presence of more knowledge is good news for society! But it is bad news for knowledge workers who were previously relying on the scarcity of knowledge for their livelihood. The top few performers in any given industry will be able to satisfy a much larger share of market demand at a much lower price. Median performers in every industry will go from being high-status knowledge workers to unemployed and potentially unemployable practically overnight. We don’t have any historical precedent for the level of chaos that will cause.

There are other more subtle and more transformative challenges as well: security challenges (How do we stay safe when any given terrorist has ready access to all the world’s knowledge?), logistical challenges (How do we manage the courts in a world with unlimited artificial lawyers?), political challenges (What does it mean for democracy that politicians will be able to train AI models to reach out individually to appeal to every potential voter? What does it mean for propaganda?), ethical challenges (What does it mean for the future of human love if AI can convincingly simulate affection?) and epistemological challenges (How do we agree on a shared reality in a world of convincing deepfakes?).

Navel-gazing about how to tame a sci-fi daemon before it tames us is an interesting thought experiment, but ultimately a distraction from the less exciting but more practical threats that AI already poses today. As I’ve said before, I don’t think we need theoretical defenses against imaginary threats. I’d rather we spent that energy solving the very real problems we already have.

Other things happening right now:

A delightful essay about the difficulty of knowing what is real anymore. Any further context I could add would only diminish the experience. Enjoy.

In retrospect writing an economics and tech blog turned out to involve a lot more coverage of art and art history than I would have guessed.